PostgreSQL 11 High Availability Setup with Pacemaker + Corosync + pcs

Tags: PG high availability, Pacemaker, pcs, Corosync

Introduction

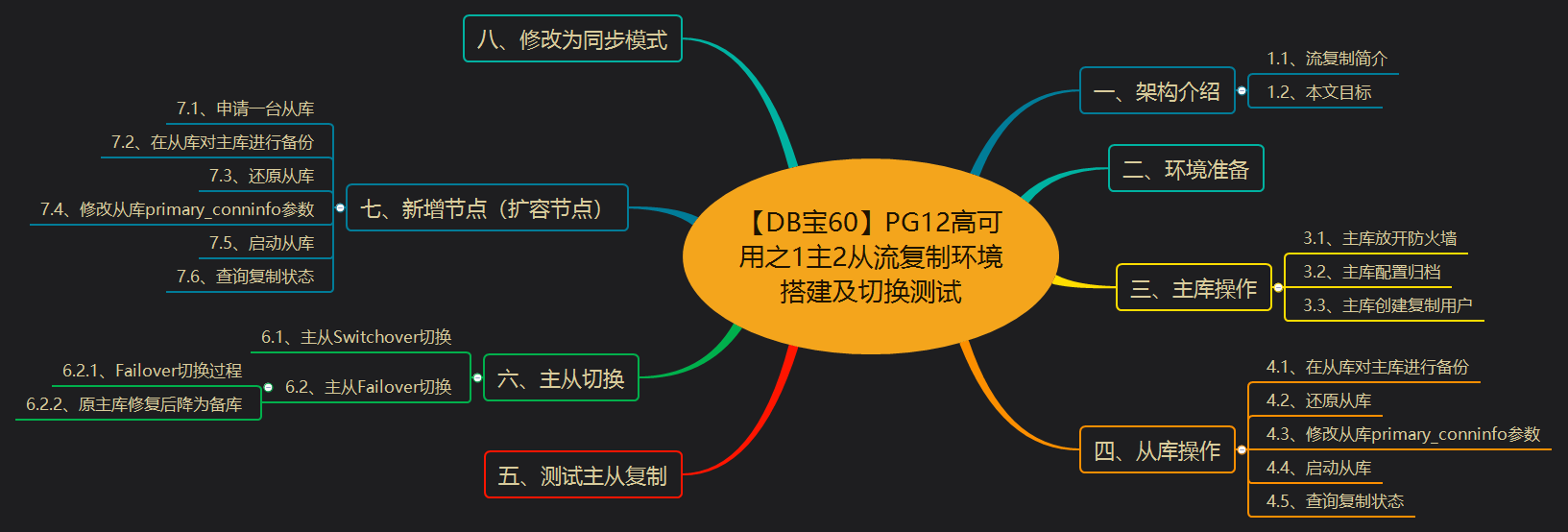

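Common high-availability tools for PostgreSQL include Pgpool-II, keepalived, Patroni + etcd, and repmgr. Typical architectures include the following:

- PG 14 + Pgpool-II + Watchdog for high availability (automatic failover + read/write splitting + load balancing)

- PG 14 + Pgpool-II + Watchdog for high availability

- PG high availability with primary/standby streaming replication + keepalived

- PG high-availability cluster: deploying Patroni + etcd + HAProxy + keepalived with Prometheus + Grafana monitoring

- PostgreSQL high availability with repmgr (1 primary, 2 standbys, 1 witness) + Pgpool-II for primary/standby switchover, read/write splitting, and load balancing

- [DB宝61] Read/write splitting and load balancing for PostgreSQL with Pgpool-II

- [DB宝60] PG 12 high availability: setting up 1 primary + 2 standbys with streaming replication and testing switchover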

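Today we look at another high-availability approach: PostgreSQL high availability based on Pacemaker + Corosync + pcs. Pacemaker handles resource failover and Corosync handles heartbeat detection; used together, they manage the high-availability architecture automatically. Heartbeat detection checks whether a server is still providing service. If a server behaves abnormally, it is considered down, and Pacemaker then moves its resources to another node. pcs is the configuration tool for Corosync and Pacemaker.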

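Pacemaker is the most widely used open-source cluster resource manager (CRM) on Linux. It uses the messaging and membership capabilities provided by the cluster infrastructure (Corosync or Heartbeat) to detect failures at the node and resource level and to recover resources, maximizing the availability of cluster services. It is the control center of the whole high-availability cluster and manages the state and behavior of all cluster resources. Clients configure, manage, and monitor the running state of the whole cluster through Pacemaker.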

Pacemaker website: https://clusterlabs.org/pacemaker/

Pacemaker on GitHub: https://github.com/ClusterLabs/pacemaker

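The Corosync cluster engine is a group communication system that provides additional features for implementing high availability within applications. Corosync and Heartbeat both belong to the messaging layer: they provide heartbeat detection for services and hosts, so that when the monitored primary service is found to be down, the cluster can fail over promptly to a standby node and keep the system available. In practice, Corosync is usually chosen for heartbeat detection and paired with Pacemaker as the resource manager to build a high-availability system.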
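The membership information gathered by the heartbeat layer is also what lets a cluster decide whether a partition still holds quorum. As a rough illustration only, assuming the usual majority rule with one vote per node (the function names are hypothetical and are not part of Corosync or pcs), the arithmetic looks like this:

```bash
#!/usr/bin/env bash
# Sketch of the majority rule, assuming one vote per node.
# quorum_votes and has_quorum are hypothetical helper names.

# Print the number of votes needed for a majority in a cluster of N nodes.
quorum_votes() {
  local nodes=$1
  echo $(( nodes / 2 + 1 ))
}

# Succeed if ALIVE nodes out of TOTAL still form a majority.
has_quorum() {
  local total=$1 alive=$2
  [ "$alive" -ge "$(quorum_votes "$total")" ]
}
```

For the three-node cluster built in this article, `quorum_votes 3` prints 2, so losing a single node still leaves a majority.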

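pcs is the configuration tool for Corosync and Pacemaker. It lets users easily view, modify, and create Pacemaker-based clusters. pcs includes pcsd, a pcs daemon that can act as a remote server for pcs and provides a web UI. All management of Pacemaker and all changes to its configuration properties can be done through pcs.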

In fact, Pacemaker + Corosync + pcs is also the management stack used in "Installing MSSQL 2017 Always On Availability Group on Linux". For details, see: https://www.dbaup.com/zailinuxzhonganzhuangmssql-2017-always-on-availability-group.html

Note: please read the summary section of this article first!

Environment

| IP | Port | Mapped host port | Role | OS | Software |
|---|---|---|---|---|---|
| 172.72.6.81 | 5432 | 64381 | Primary | CentOS 7.6 | PG 11.12 + Pacemaker 1.1.23 + Corosync + pcs |
| 172.72.6.82 | 5432 | 64382 | Standby | CentOS 7.6 | PG 11.12 + Pacemaker 1.1.23 + Corosync + pcs |
| 172.72.6.83 | 5432 | 64383 | Standby | CentOS 7.6 | PG 11.12 + Pacemaker 1.1.23 + Corosync + pcs |

Use Docker to provision the environment quickly:

```bash
docker rm -f lhrpgpcs81
docker run -d --name lhrpgpcs81 -h lhrpgpcs81 \
  --net=pg-network --ip 172.72.6.81 \
  -p 64381:5432 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true lhrbest/lhrpgall:2.0 \
  /usr/sbin/init

docker rm -f lhrpgpcs82
docker run -d --name lhrpgpcs82 -h lhrpgpcs82 \
  --net=pg-network --ip 172.72.6.82 \
  -p 64382:5432 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true lhrbest/lhrpgall:2.0 \
  /usr/sbin/init

docker rm -f lhrpgpcs83
docker run -d --name lhrpgpcs83 -h lhrpgpcs83 \
  --net=pg-network --ip 172.72.6.83 \
  -p 64383:5432 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true lhrbest/lhrpgall:2.0 \
  /usr/sbin/init

cat >> /etc/hosts <<"EOF"
172.72.6.81 lhrpgpcs81
172.72.6.82 lhrpgpcs82
172.72.6.83 lhrpgpcs83
172.72.6.84 vip-master
172.72.6.85 vip-slave
EOF

# Adjust on all 3 nodes
systemctl stop pg94 pg96 pg10 pg11 pg12 pg13 postgresql-13
systemctl disable pg94 pg96 pg10 pg11 pg12 pg13 postgresql-13
mount -o remount,size=4G /dev/shm
```
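The three `docker run` commands above differ only in the node suffix. A small sketch that prints them for review instead of executing them; `print_node_cmds` is a hypothetical helper, while the image, network, and addresses are the ones used above:

```bash
#!/usr/bin/env bash
# Dry run: print (do not execute) the docker commands for one node.
# The argument is the node suffix, which is also the last octet of the
# container IP and the last two digits of the mapped host port.
print_node_cmds() {
  local n=$1
  local name="lhrpgpcs${n}"
  printf 'docker rm -f %s\n' "$name"
  printf 'docker run -d --name %s -h %s --net=pg-network --ip 172.72.6.%s -p 643%s:5432 -v /sys/fs/cgroup:/sys/fs/cgroup --privileged=true lhrbest/lhrpgall:2.0 /usr/sbin/init\n' \
    "$name" "$name" "$n" "$n"
}

for n in 81 82 83; do
  print_node_cmds "$n"
done
```

Once the printed commands look right, the loop output can be piped to `sh` on the Docker host.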

Install and configure the cluster

Install the dependencies and cluster software, and start pcsd

Run on all 3 nodes:

```bash
yum install -y pacemaker corosync pcs

systemctl enable pacemaker corosync pcsd
systemctl start pcsd
systemctl status pcsd
```

Set the password

Run on all 3 nodes:

```bash
echo "hacluster:lhr" | chpasswd
```

Authenticate the cluster nodes

Run on any one node:

```text
[root@lhrpgpcs81 /]# pcs cluster auth -u hacluster -p lhr lhrpgpcs81 lhrpgpcs82 lhrpgpcs83
lhrpgpcs83: Authorized
lhrpgpcs82: Authorized
lhrpgpcs81: Authorized
```

Synchronize the configuration

Run on any one node:

```text
[root@lhrpgpcs81 /]# pcs cluster setup --last_man_standing=1 --name pgcluster lhrpgpcs81 lhrpgpcs82 lhrpgpcs83
Destroying cluster on nodes: lhrpgpcs81, lhrpgpcs82, lhrpgpcs83...
lhrpgpcs82: Stopping Cluster (pacemaker)...
lhrpgpcs83: Stopping Cluster (pacemaker)...
lhrpgpcs81: Stopping Cluster (pacemaker)...
lhrpgpcs83: Successfully destroyed cluster
lhrpgpcs81: Successfully destroyed cluster
lhrpgpcs82: Successfully destroyed cluster

Sending 'pacemaker_remote authkey' to 'lhrpgpcs81', 'lhrpgpcs82', 'lhrpgpcs83'
lhrpgpcs81: successful distribution of the file 'pacemaker_remote authkey'
lhrpgpcs82: successful distribution of the file 'pacemaker_remote authkey'
lhrpgpcs83: successful distribution of the file 'pacemaker_remote authkey'
Sending cluster config files to the nodes...
lhrpgpcs81: Succeeded
lhrpgpcs82: Succeeded
lhrpgpcs83: Succeeded

Synchronizing pcsd certificates on nodes lhrpgpcs81, lhrpgpcs82, lhrpgpcs83...
lhrpgpcs83: Success
lhrpgpcs82: Success
lhrpgpcs81: Success
Restarting pcsd on the nodes in order to reload the certificates...
lhrpgpcs83: Success
lhrpgpcs82: Success
lhrpgpcs81: Success
```

Start the cluster

```bash
systemctl status pacemaker corosync pcsd
pcs cluster enable --all
pcs cluster start --all
pcs cluster status
pcs status
crm_mon -Afr -1
systemctl status pacemaker corosync pcsd
```

Session output:

```text
[root@lhrpgpcs81 /]# systemctl status pacemaker corosync pcsd
● pacemaker.service - Pacemaker High Availability Cluster Manager
   Loaded: loaded (/usr/lib/systemd/system/pacemaker.service; disabled; vendor preset: disabled)
   Active: inactive (dead)
     Docs: man:pacemakerd
           https://clusterlabs.org/pacemaker/doc/en-US/Pacemaker/1.1/html-single/Pacemaker_Explained/index.html

● corosync.service - Corosync Cluster Engine
   Loaded: loaded (/usr/lib/systemd/system/corosync.service; disabled; vendor preset: disabled)
   Active: inactive (dead)
     Docs: man:corosync
           man:corosync.conf
           man:corosync_overview

● pcsd.service - PCS GUI and remote configuration interface
   Loaded: loaded (/usr/lib/systemd/system/pcsd.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2022-05-26 09:17:43 CST; 1min 4s ago
     Docs: man:pcsd(8)
           man:pcs(8)
 Main PID: 1682 (pcsd)
   CGroup: /docker/fb1552ae5063103b6754dc18a049673c6ee97fb59bc767374aeef2768d3607be/system.slice/pcsd.service
           └─1682 /usr/bin/ruby /usr/lib/pcsd/pcsd

May 26 09:17:42 lhrpgpcs81 systemd[1]: Starting PCS GUI and remote configuration interface...
May 26 09:17:43 lhrpgpcs81 systemd[1]: Started PCS GUI and remote configuration interface.
[root@lhrpgpcs81 /]# pcs cluster start --all
lhrpgpcs81: Starting Cluster (corosync)...
lhrpgpcs82: Starting Cluster (corosync)...
lhrpgpcs83: Starting Cluster (corosync)...
lhrpgpcs81: Starting Cluster (pacemaker)...
lhrpgpcs83: Starting Cluster (pacemaker)...
lhrpgpcs82: Starting Cluster (pacemaker)...
[root@lhrpgpcs81 /]# systemctl status pacemaker corosync pcsd
● pacemaker.service - Pacemaker High Availability Cluster Manager
   Loaded: loaded (/usr/lib/systemd/system/pacemaker.service; disabled; vendor preset: disabled)
   Active: active (running) since Thu 2022-05-26 09:19:09 CST; 3s ago
     Docs: man:pacemakerd
           https://clusterlabs.org/pacemaker/doc/en-US/Pacemaker/1.1/html-single/Pacemaker_Explained/index.html
 Main PID: 1893 (pacemakerd)
   CGroup: /docker/fb1552ae5063103b6754dc18a049673c6ee97fb59bc767374aeef2768d3607be/system.slice/pacemaker.service
           ├─1893 /usr/sbin/pacemakerd -f
           ├─1894 /usr/libexec/pacemaker/cib
           ├─1895 /usr/libexec/pacemaker/stonithd
           ├─1896 /usr/libexec/pacemaker/lrmd
           ├─1897 /usr/libexec/pacemaker/attrd
           ├─1898 /usr/libexec/pacemaker/pengine
           └─1899 /usr/libexec/pacemaker/crmd

May 26 09:19:11 lhrpgpcs81 crmd[1899]: notice: Connecting to cluster infrastructure: corosync
May 26 09:19:11 lhrpgpcs81 crmd[1899]: notice: Quorum acquired
May 26 09:19:11 lhrpgpcs81 attrd[1897]: notice: Node lhrpgpcs83 state is now member
May 26 09:19:11 lhrpgpcs81 attrd[1897]: notice: Node lhrpgpcs82 state is now member
May 26 09:19:11 lhrpgpcs81 crmd[1899]: notice: Node lhrpgpcs81 state is now member
May 26 09:19:11 lhrpgpcs81 crmd[1899]: notice: Node lhrpgpcs82 state is now member
May 26 09:19:11 lhrpgpcs81 crmd[1899]: notice: Node lhrpgpcs83 state is now member
May 26 09:19:11 lhrpgpcs81 crmd[1899]: notice: The local CRM is operational
May 26 09:19:11 lhrpgpcs81 crmd[1899]: notice: State transition S_STARTING -> S_PENDING
May 26 09:19:13 lhrpgpcs81 crmd[1899]: notice: Fencer successfully connected

● corosync.service - Corosync Cluster Engine
   Loaded: loaded (/usr/lib/systemd/system/corosync.service; disabled; vendor preset: disabled)
   Active: active (running) since Thu 2022-05-26 09:19:08 CST; 4s ago
     Docs: man:corosync
           man:corosync.conf
           man:corosync_overview
  Process: 1861 ExecStart=/usr/share/corosync/corosync start (code=exited, status=0/SUCCESS)
 Main PID: 1869 (corosync)
   CGroup: /docker/fb1552ae5063103b6754dc18a049673c6ee97fb59bc767374aeef2768d3607be/system.slice/corosync.service
           └─1869 corosync

May 26 09:19:08 lhrpgpcs81 corosync[1869]: [QUORUM] Members[2]: 1 2
May 26 09:19:08 lhrpgpcs81 corosync[1869]: [MAIN ] Completed service synchronization, ready to provide service.
May 26 09:19:08 lhrpgpcs81 corosync[1861]: Starting Corosync Cluster Engine (corosync): [ OK ]
May 26 09:19:08 lhrpgpcs81 systemd[1]: Started Corosync Cluster Engine.
May 26 09:19:08 lhrpgpcs81 corosync[1869]: [TOTEM ] A new membership (172.72.6.81:13) was formed. Members joined: 3
May 26 09:19:08 lhrpgpcs81 corosync[1869]: [CPG ] downlist left_list: 0 received
May 26 09:19:08 lhrpgpcs81 corosync[1869]: [CPG ] downlist left_list: 0 received
May 26 09:19:08 lhrpgpcs81 corosync[1869]: [CPG ] downlist left_list: 0 received
May 26 09:19:08 lhrpgpcs81 corosync[1869]: [QUORUM] Members[3]: 1 2 3
May 26 09:19:08 lhrpgpcs81 corosync[1869]: [MAIN ] Completed service synchronization, ready to provide service.

● pcsd.service - PCS GUI and remote configuration interface
   Loaded: loaded (/usr/lib/systemd/system/pcsd.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2022-05-26 09:17:43 CST; 1min 29s ago
     Docs: man:pcsd(8)
           man:pcs(8)
 Main PID: 1682 (pcsd)
   CGroup: /docker/fb1552ae5063103b6754dc18a049673c6ee97fb59bc767374aeef2768d3607be/system.slice/pcsd.service
           └─1682 /usr/bin/ruby /usr/lib/pcsd/pcsd

May 26 09:17:42 lhrpgpcs81 systemd[1]: Starting PCS GUI and remote configuration interface...
May 26 09:17:43 lhrpgpcs81 systemd[1]: Started PCS GUI and remote configuration interface.
[root@lhrpgpcs81 /]# pcs cluster status
Cluster Status:
 Stack: corosync
 Current DC: lhrpgpcs81 (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum
 Last updated: Thu May 26 10:59:02 2022
 Last change: Thu May 26 10:58:18 2022 by hacluster via crmd on lhrpgpcs81
 3 nodes configured
 0 resource instances configured

PCSD Status:
  lhrpgpcs81: Online
  lhrpgpcs82: Online
  lhrpgpcs83: Online
[root@lhrpgpcs81 /]# pcs status
Cluster name: pgcluster

WARNINGS:
No stonith devices and stonith-enabled is not false

Stack: corosync
Current DC: lhrpgpcs81 (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum
Last updated: Thu May 26 10:59:19 2022
Last change: Thu May 26 10:58:18 2022 by hacluster via crmd on lhrpgpcs81

3 nodes configured
0 resource instances configured

Online: [ lhrpgpcs81 lhrpgpcs82 lhrpgpcs83 ]

No resources


Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
[root@lhrpgpcs81 /]# crm_mon -Afr -1
Stack: corosync
Current DC: lhrpgpcs81 (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum
Last updated: Thu May 26 10:59:40 2022
Last change: Thu May 26 10:58:18 2022 by hacluster via crmd on lhrpgpcs81

3 nodes configured
0 resource instances configured

Online: [ lhrpgpcs81 lhrpgpcs82 lhrpgpcs83 ]

No resources


Node Attributes:
* Node lhrpgpcs81:
* Node lhrpgpcs82:
* Node lhrpgpcs83:

Migration Summary:
* Node lhrpgpcs81:
* Node lhrpgpcs82:
* Node lhrpgpcs83:
```
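Rather than reading the status by eye, the number of online nodes can be pulled out of the `Online: [ ... ]` line. This is only a sketch: `online_nodes` is a hypothetical helper, and it assumes the line format shown in the Pacemaker 1.1.23 output above; other Pacemaker versions may format this line differently.

```bash
#!/usr/bin/env bash
# Count the node names on the first "Online: [ ... ]" line read from stdin.
# Prints 0 if no such line is found.
online_nodes() {
  awk '/^[ \t]*Online: \[/ {
         n = 0
         for (i = 1; i <= NF; i++)
           if ($i != "Online:" && $i != "[" && $i != "]") n++
         print n
         found = 1
         exit
       }
       END { if (!found) print 0 }'
}

# Intended usage on a cluster node (not executed here):
#   crm_mon -Afr -1 | online_nodes
```

For this cluster the expected value is 3; anything lower suggests that a node has not joined yet.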

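Install and configure the PostgreSQL environment

The Pacemaker installed by yum is version 1.1.23. With this version, PostgreSQL is supported only up to PG 11.

Check which PostgreSQL versions the Pacemaker pgsql resource agent refers to:

```text
[root@lhrpgpcs81 /]# cat /usr/lib/ocf/resource.d/heartbeat/pgsql | grep ocf_version_cmp
    ocf_version_cmp "$version" "9.3"
    ocf_version_cmp "$version" "10"
    ocf_version_cmp "$version" "9.4"
```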
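To make the same check a little easier to read, the version literals can be extracted and sorted. This is a sketch only: `ra_versions` is a hypothetical helper, it assumes the `ocf_version_cmp "$version" "X"` call shape shown above, and it relies on `sort -V` (available in GNU coreutils).

```bash
#!/usr/bin/env bash
# List the distinct PostgreSQL versions that a resource agent script
# compares against, in ascending version order.
ra_versions() {
  local ra_file=$1
  grep 'ocf_version_cmp' "$ra_file" \
    | sed -n 's/.*ocf_version_cmp "\$version" "\([0-9.]*\)".*/\1/p' \
    | sort -V -u
}

# Intended usage on a cluster node (not executed here):
#   ra_versions /usr/lib/ocf/resource.d/heartbeat/pgsql
```

The list only shows which version boundaries the agent knows about; it is not an official compatibility statement.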

Modify parameters on the primary and enable archiving

My image already has PostgreSQL installed, so there is no need to install it again. Only basic configuration is required, such as the firewall and remote login. Then start the primary:

```
vi /pg11/pgdata/postgresql.conf

port=5432
# comment out the unix_socket_directories line
wal_level='replica'
archive_mode='on'
archive_command='test ! -f /pg11/archive/%f && cp %p /pg11/archive/%f'

max_wal_senders=10
wal_keep_segments=256
wal_sender_timeout=60s

# Fix ownership
chown postgres:postgres -R /pg11

# Edit the service unit
vi /etc/systemd/system/pg11.service
User=postgres
Environment=PGPORT=5432

systemctl daemon-reload
systemctl status pg11
systemctl restart pg11
```
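The `archive_command` above copies a WAL segment only when the archive does not already hold a file of the same name; in that command `%p` is the path of the segment to archive and `%f` is its file name. A minimal sketch of the same logic as a shell function, where `archive_wal` is a hypothetical name used only for illustration:

```bash
#!/usr/bin/env bash
# Emulates: test ! -f /pg11/archive/%f && cp %p /pg11/archive/%f
#   $1 = path of the WAL segment (what %p expands to)
#   $2 = archive directory (/pg11/archive in this article)
# Returns non-zero if the segment is already archived or the copy fails,
# which is how PostgreSQL learns that archiving did not succeed.
archive_wal() {
  local src=$1 archive_dir=$2
  local dest
  dest="$archive_dir/$(basename "$src")"
  test ! -f "$dest" && cp "$src" "$dest"
}
```

Refusing to overwrite an existing file protects the archive from being clobbered, for example if two servers were ever pointed at the same archive directory.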

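Create the replication user on the primary

```
su - postgres
# Connect locally through the Unix socket in /tmp ...
psql -h /tmp
# ... or through the mapped host port
psql -h 192.168.88.35 -p64381 -U postgres

create user lhrrep with replication password 'lhr';
```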

Create the standby nodes

Run on nodes 2 and 3:

```bash
systemctl stop pg11
rm -rf /pg11/pgdata
pg_basebackup -h lhrpgpcs81 -U lhrrep -p 5432 -D /pg11/pgdata --wal-method=stream --checkpoint=fast --progress --verbose
chown postgres:postgres -R /pg11/pgdata
```

Stop the primary

```bash
systemctl stop pg11
```

Configure the database cluster

Run as root:

```bash
cat > lhrpg_cluster_setup.sh <<"EOF"
# Work on an offline copy of the CIB; it is pushed to the cluster at the end
pcs cluster cib pgsql_cfg

# Keep resources running without quorum, and disable fencing (STONITH)
pcs -f pgsql_cfg property set no-quorum-policy="ignore"
pcs -f pgsql_cfg property set stonith-enabled="false"
pcs -f pgsql_cfg resource defaults resource-stickiness="INFINITY"
pcs -f pgsql_cfg resource defaults migration-threshold="1"

# Virtual IP that follows the primary
pcs -f pgsql_cfg resource create vip-master IPaddr2 \
   ip="172.72.6.84" \
   nic="eth0" \
   cidr_netmask="24" \
   op start   timeout="60s" interval="0s"  on-fail="restart" \
   op monitor timeout="60s" interval="10s" on-fail="restart" \
   op stop    timeout="60s" interval="0s"  on-fail="block"

# Virtual IP for the standby side
pcs -f pgsql_cfg resource create vip-slave IPaddr2 \
   ip="172.72.6.85" \
   nic="eth0" \
   cidr_netmask="24" \
   meta migration-threshold="0" \
   op start   timeout="60s" interval="0s"  on-fail="stop" \
   op monitor timeout="60s" interval="10s" on-fail="restart" \
   op stop    timeout="60s" interval="0s"  on-fail="ignore"

# PostgreSQL resource managed by the pgsql resource agent
pcs -f pgsql_cfg resource create pgsql pgsql \
   pgctl="/pg11/pg11/bin/pg_ctl" \
   psql="/pg11/pg11/bin/psql" \
   pgdata="/pg11/pgdata" \
   config="/pg11/pgdata/postgresql.conf" \
   rep_mode="async" \
   node_list="lhrpgpcs81 lhrpgpcs82 lhrpgpcs83" \
   master_ip="172.72.6.84" \
   repuser="lhrrep" \
   primary_conninfo_opt="password=lhr keepalives_idle=60 keepalives_interval=5 keepalives_count=5" \
   restart_on_promote='true' \
   op start   timeout="60s" interval="0s"  on-fail="restart" \
   op monitor timeout="60s" interval="4s" on-fail="restart" \
   op monitor timeout="60s" interval="3s" on-fail="restart" role="Master" \
   op promote timeout="60s" interval="0s"  on-fail="restart" \
   op demote  timeout="60s" interval="0s"  on-fail="stop" \
   op stop    timeout="60s" interval="0s"  on-fail="block" \
   op notify  timeout="60s" interval="0s"

# Master/slave (multi-state) wrapper around the pgsql resource
pcs -f pgsql_cfg resource master msPostgresql pgsql \
   master-max=1 master-node-max=1 clone-max=5 clone-node-max=1 notify=true

pcs -f pgsql_cfg resource group add master-group vip-master
pcs -f pgsql_cfg resource group add slave-group vip-slave

# Colocation and ordering between the VIP groups and the PostgreSQL roles
pcs -f pgsql_cfg constraint colocation add master-group with master msPostgresql INFINITY
pcs -f pgsql_cfg constraint order promote msPostgresql then start master-group symmetrical=false score=INFINITY
pcs -f pgsql_cfg constraint order demote  msPostgresql then stop  master-group symmetrical=false score=0

pcs -f pgsql_cfg constraint colocation add slave-group with slave msPostgresql INFINITY
pcs -f pgsql_cfg constraint order promote msPostgresql then start slave-group symmetrical=false score=INFINITY
pcs -f pgsql_cfg constraint order demote  msPostgresql then stop  slave-group symmetrical=false score=0

# Push the finished configuration to the live cluster
pcs cluster cib-push pgsql_cfg
EOF
```
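The script above refers to several paths (`pgctl`, `psql`, `pgdata`, `config`). Before pushing the configuration, it can be worth confirming on each node that they exist, since a wrong path tends to surface only later as a failed resource start. A small sketch, with `missing_paths` as a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Print every argument that does not exist on this node.
# Returns 0 if all paths exist, 1 otherwise.
missing_paths() {
  local p rc=0
  for p in "$@"; do
    if [ ! -e "$p" ]; then
      echo "missing: $p"
      rc=1
    fi
  done
  return "$rc"
}

# Intended usage with the paths from the script above (not executed here):
#   missing_paths /pg11/pg11/bin/pg_ctl /pg11/pg11/bin/psql \
#                 /pg11/pgdata /pg11/pgdata/postgresql.conf
```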

Run the shell script. When it finishes, it leaves behind a configuration file named pgsql_cfg:

```bash
chmod +x lhrpg_cluster_setup.sh
sh lhrpg_cluster_setup.sh
```